Perception technology: Mobile robots in dynamic environments

Identifying objects in space is not a straightforward task for mobile robots. They require multiple perception technologies to operate safely in dynamic environments. Facilities rely on human workers, making the environment unpredictable for mobile robots.

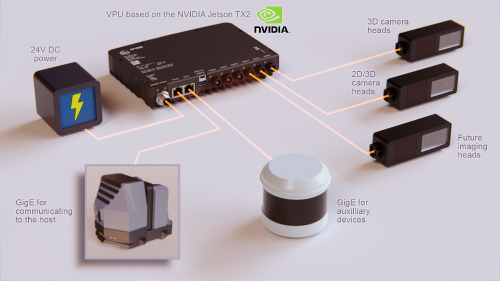

Developers require a robust and comprehensive solution that provides certainty and safety in unstructured environments. ifm’s O3R perception platform improves certainty in dynamic environments by combining indirect Time of Flight (ToF) technology in its 2D/3D camera heads with the NVIDIA Jetson platform in the vision processing unit.

The result is improved throughput and lower hardware costs. When autonomous mobile robots and automated guided vehicles make more informed decisions, they need fewer human interventions, which significantly reduces downtime for the facility. Productivity and profits increase when robots complete more missions per day.

Multimodal: The future of the perception stack

Mobile robotics is not limited to 3D cameras. Most robots incorporate 2D cameras, LiDAR, 3D LiDAR, ultrasonics, and other sensors in a standard perception stack. The O3R perception platform simplifies multimodal fusion, tackling complex perception challenges and paving the way for advanced programming.

Ease of integration

The O3R perception platform is designed to reduce complexity. It synchronizes diverse data sets and modalities, ensuring robots operate with seamless precision.

Indirect Time of Flight: A leap forward in sensing

Indirect Time of Flight (ToF) changes how mobile robots perceive depth. Instead of timing individual light pulses, it measures the phase shift of continuously modulated infrared light. With this technology, ifm offers a cost-effective alternative to traditional LiDAR that delivers detailed 3D imaging for precise obstacle detection.

The ifm ToF system is tuned for accuracy at close range (0–4 m), providing the detailed imaging that mobile robots need to navigate complex environments effectively.
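
For readers new to the measurement principle, the following is a minimal sketch of the textbook continuous-wave indirect ToF relationship between phase shift and distance. It is not ifm's implementation: the function names are hypothetical, and the 30 MHz modulation frequency is an assumed value chosen only for illustration, since the O3R's actual modulation scheme is not stated here.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def itof_distance_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance implied by the phase shift of continuously modulated light.

    The light travels to the target and back, so one full 2*pi phase
    cycle corresponds to a round trip of one modulation wavelength.
    """
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


# Assumed modulation frequency, for illustration only (not an O3R specification).
ASSUMED_MODULATION_HZ = 30e6

if __name__ == "__main__":
    half_cycle = itof_distance_m(math.pi, ASSUMED_MODULATION_HZ)
    wrap_limit = unambiguous_range_m(ASSUMED_MODULATION_HZ)
    print(f"phase shift of pi rad -> {half_cycle:.3f} m")
    print(f"unambiguous range     -> {wrap_limit:.3f} m")
```

The sketch shows why the modulation frequency matters: a lower frequency extends the range before the phase wraps, while a higher frequency resolves distance more finely within a shorter range.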

Edge computing for enhanced perception

By separating the perception stack from primary processing, ifm introduces an edge compute solution for perception. This groundbreaking approach prevents the overloading of primary vehicle processors, ensuring a smooth data flow and enabling robots to make real-time decisions more effectively.

ifm’s processing platform leverages the industry-standard NVIDIA Jetson ecosystem, providing the combined power of both GPU and CPU without the need to reinvent the wheel. By prioritizing simplicity and flexibility in software integration, the O3R makes it easier for developers to create and implement their vision.

Combine Pallet Detection and Obstacle Detection algorithms

Mobile robots use 3D imaging to significantly enhance their ability to detect objects in their drive path. The same technology enables them to engage with targets, including pallets. However, it is a challenge to integrate separate obstacle detection and pallet detection solutions onto a single robot.

The O3R perception platform makes it simple to deploy both the ifm obstacle detection and pallet detection algorithms. The benefits include:

- Simplified coordination of both solutions on a single platform.

- Streamlined hardware components.

- Reduced integration and development time.

Accelerate the deployment of mobile robots by reducing hardware costs and software integration effort. If you are exploring automated solutions for AGVs, consider combining the ifm obstacle detection and pallet detection algorithms. Both are easily deployed together on the O3R robotic perception platform.

Contact us today to discuss your application with a robotics specialist.

O3R 2D / 3D head specifications

- 3D image sensor: pmd 3D ToF chip

- 3D resolution: 224 x 172 pixels

- 3D field of view: 105° x 78° or 60° x 45°

- 3D light source: 940 nm infrared

- 2D image sensor: RGB

- 2D resolution: 1280 x 800 pixels
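
To get a feel for what the 3D resolution and field of view mean at working distance, the rough estimate below converts them into scene coverage and average pixel spacing. It is a sketch under stated assumptions: an ideal pinhole model with a flat target perpendicular to the optical axis, the first number of each specification taken as the horizontal value, and hypothetical function names. Real lenses, especially the 105° option, distort, so treat the output as an order-of-magnitude guide rather than a datasheet figure.

```python
import math


def footprint_m(fov_deg: float, distance_m: float) -> float:
    """Width of scene covered at a given distance (ideal pinhole, flat target)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def mean_pixel_spacing_m(fov_deg: float, pixels: int, distance_m: float) -> float:
    """Average spacing between neighbouring pixel centres on the target."""
    return footprint_m(fov_deg, distance_m) / pixels


if __name__ == "__main__":
    distance = 4.0  # far end of the 0-4 m close range quoted for the ToF system
    # Assumption: 224 pixels span the horizontal field of view.
    for fov in (60.0, 105.0):
        width = footprint_m(fov, distance)
        spacing = mean_pixel_spacing_m(fov, 224, distance)
        print(f"{fov:5.1f} deg: {width:.2f} m wide, about {spacing * 100:.1f} cm per pixel")
```

Under these assumptions, the narrower field of view trades coverage for finer spacing between measurements, which is the practical difference between the two head options.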

OVP vision processing unit specifications

- Ethernet ports: 2x 1 GigE

- Camera ports: 6x proprietary 2D/3D camera ports

- Power: 24 VDC and CAN

- USB ports: USB 3.0 + USB 2.0

Gain a competitive edge

Are you ready to take your mobile robot development to the next level? Fill out the form or contact Tim McCarver directly at tim.mccarver@ifm.com.